Features

The Eyes Have It

Pioneered as a language-research tool by Rochester professor Michael Tanenhaus, the eyetracker is proving to be an exceptionally insightful way to explore language. By Scott Hauser. Photography by Elizabeth Torgerson-Lamark.


Michael Tanenhaus can remember thinking, “This isn’t working,” as he sat at a table in the virtual reality lab in the Computer Studies Building in 1993.

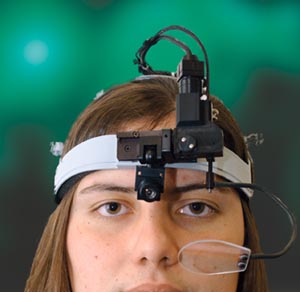

The professor of brain and cognitive sciences was wearing a helmet-like headpiece outfitted with a camera that was supposed to pinpoint where he was looking as he listened to commands to organize assorted objects on the table. Sitting at a nearby video monitor, Tanenhaus’s then graduate student, Michael Spivey ’96 (PhD), could see what his advisor couldn’t—a pair of crosshairs flitting across the screen as Tanenhaus turned his attention from cup to napkin to plate.

The two were part of a four-person team testing a new idea: whether technology designed to track eye movements could shed light on how humans comprehend language in “real-life” situations. Would the crosshairs on the monitor move in sync with the mention of each object’s name?

Ever the teacher, Tanenhaus next thought, “How am I going to explain in a good, advisorly way that sometimes experiments just don’t work the way you would hope?”

But as he took off the headpiece and turned to Spivey, it was evident that no explanation was necessary.

“Spivey had this huge grin on his face,” Tanenhaus says.

ON TRACK: “The kinds of questions that we are asking and getting answers to are the kinds of questions I couldn’t even have thought of asking 15 years ago,” says Tanenhaus, who first systematically recorded the connections between eye movements and language comprehension in “real-life” situations.

On the monitor, each move of Tanenhaus’s eyes had been recorded down to the millisecond, an unexpectedly rich potential source of data for studying how humans process language. When he later showed such videotapes at professional conferences, there were audible gasps in the audience.

Says Tanenhaus: “It was clear that this was really promising.”

Eye-poppingly clear. Out of that initial trial has grown an entire line of research that has helped psycholinguists, psychologists, cognitive scientists, linguists, vision researchers, and others better understand how humans comprehend spoken language in everyday, natural settings.

First published in Science in 1995, the work and its influence are the subject of three separate edited volumes (one co-edited by Tanenhaus) scheduled to be published this year.

In their forthcoming book The Interface of Language, Vision, and Action: Eye Movements and the Visual World, Michigan State University researchers John Henderson and Fernanda Ferreira credit Tanenhaus and his lab with ushering in a new era of exploring how humans understand language:

“It is an understatement to say that this technique has caught on,” they write. “In the last five years, psycholinguists have used eye movements to study how speech and language are understood, to learn about the processes involved in language production, and even to shed light on how conversations are managed. [The] second era of research combining psycholinguistics and the study of eye movements promises to be at least as productive as the first.”

Mary Hayhoe, professor of brain and cognitive sciences and herself an expert on how human vision and movement interact, says Tanenhaus’s work is especially insightful because the approach allows researchers to study language in a natural context. Tanenhaus, who now has a multiroom eye-tracking lab in Meliora Hall, conducted his original trials in a lab that Hayhoe shares with Dana Ballard, professor of computer science. “His work was quite revolutionary,” Hayhoe says. “It really started a whole new field.”

Spivey, who now is an associate professor of psychology at Cornell University, says the excitement was palpable.

“We really did feel as if we were coming up with a new way of measuring things in psycholinguistics,” he says. “They were heady times. I haven’t had lab meetings like that in a long time.”

Language—a highly structured system of communication, yet filled with ambiguity, dependent on context, and learnable by infants—has intrigued scientists and poets alike for millennia. But like vision, language is difficult to separate out as an object of study because the tools used to study it—that is, words, meanings, sounds—make up the very stuff of language itself.

By the middle of the 20th century, psycholinguists, those who study the connection between language and the brain, had divided into two broad camps: those who adhere to the “language as product” tradition, which argues that the best way to understand language is to understand the constituent parts that make it up; and those who adhere to the “language as action” tradition, which argues that language cannot be fully understood without considering the context in which it is used.

But both camps agreed that getting a picture of language in its full richness was limited by the artificial settings of most laboratories and experimental environments. They had been looking for a methodology that could capture some of the subtleties in the way people process language.

Intrigued by the challenge, Tanenhaus and his group of doctoral students began experimenting with the eyetracker, a technology first developed for use in psychology (one of its earliest applications was by advertising consultants who were curious about where eyeballs were pointing). The system uses miniature video cameras and computer software to follow the pupils of the eyes as they move with each change of focus. That information is fed to a computer, where crosshairs and a timecode are synchronized to match the movements.

INSIGHT: Sarah Brown-Schmidt, a doctoral student in Tanenhaus’s lab (left), and undergraduate research assistant Rebecca Altmann ’04, a linguistics and American Sign Language double major, demonstrate the eyetracking technology. While Altmann manipulates images on her computer screen, the eyetracker follows where her eyes are looking. That data can be collected on the lab’s computers.

“At the time, it was remarkable what we were seeing,” Tanenhaus says. “You feel a little as if you are inside someone’s head, seeing what they’re seeing. Of course, nothing is a direct window into someone else’s head, but when I first saw the videotapes, it was clear that this was going to be really exciting.”

While Tanenhaus was not the first to notice the connection between eye movements and attention, he and his team were the first to systematically record how the technology could be used to analyze language comprehension.

As people move their attention to a particular object, whether in conversation or not, their eyes naturally shift to bring that object into focus on a speck of nerves called the fovea in the sensitive macular area of the retina. The shift happens with blinding speed (it takes about 40 milliseconds) and occurs unconsciously many thousands of times a day.

Tanenhaus, a specialist in the study of how humans deal with ambiguity in comprehending words and sentences, says that the eye needs another 150 to 200 milliseconds to program itself to move in response to a change in attention. For example, when people are presented with a picture of a beagle and a picture of a beaker, within a quarter second (about 250 milliseconds) of hearing the syllable “bea” and the start of the next syllable, their eyes have figured out where to look and have begun moving in the right direction. If the second sound is “gle,” they look at the dog, and if that sound is “ker,” they look at the glassware.
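The timing in that example can be worked through as simple arithmetic. What follows is a minimal Python sketch, not the lab's analysis code: the function name `time_left_for_recognition_ms` is hypothetical, the numbers are only the ones quoted above, and it assumes a simple additive reading in which recognizing the word and programming the eye movement happen one after the other.

```python
# Illustrative arithmetic only. The figures are the ones quoted in the
# article, and the strictly additive model is an assumption.
LAUNCH_DEADLINE_MS = 250      # eyes have begun moving within about 250 ms
PROGRAMMING_MS = (150, 200)   # time the eye needs to program a movement


def time_left_for_recognition_ms(deadline=LAUNCH_DEADLINE_MS,
                                 programming=PROGRAMMING_MS):
    """Return (least, most) milliseconds left for recognizing the word.

    Subtracts the slowest and fastest programming times from the
    deadline by which the eyes have started to move.
    """
    fastest_programming, slowest_programming = programming
    return deadline - slowest_programming, deadline - fastest_programming


least, most = time_left_for_recognition_ms()
print(f"Time left to pick beagle or beaker: {least} to {most} ms")
```

On those assumptions, listeners are settling on the right picture roughly 50 to 100 milliseconds into the word, which gives a sense of why millisecond-level timecodes matter to researchers.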

For a researcher interested in how conversation works, knowing exactly when one speaker recognizes what the other is talking about provides a previously unimaginable perspective on language processing. The eyetracker, it turns out, is the perfect tool for “seeing” such previously invisible details.

“No research method solves all the problems, but for a whole range of topics, this has proved to be a major breakthrough,” Tanenhaus says.

Tanenhaus credits his interest in language, in large part, to his academic-minded family. He grew up in New York City and Iowa City, where his father was a political scientist and his mother surrounded the family with books and literature, and eventually enrolled at the University of Iowa to study speech pathology and audiology. When he was on the verge of graduation, one of his mentors suggested that he continue his studies at Columbia University.

Originally planning a career in medicine, Tanenhaus thought he would give research a try and ended up getting a Ph.D.

“Psycholinguistics was a young, exciting field at the time,” he says. “It just got more and more interesting.”

Footprints in the Field

When Michael Tanenhaus starts talking about the influence of his work, he quickly shifts his focus to the graduate students and postdoctoral fellows who have established their own academic and scientific careers.

For example, of the coauthors on the original Science paper, Spivey now is an associate professor of psychology at Cornell University, Kathleen Eberhard ’87 is an assistant professor of psychology at the University of Notre Dame, and Julie Sedivy ’97 (PhD) is an associate professor of cognitive and linguistic sciences at Brown University.

Rochester is one of the most influential centers of linguistic study, Tanenhaus says, because of the collaborative and interdisciplinary ties between brain and cognitive sciences, linguistics, vision science, and computer science.

“Because of the integrated study of things here, our graduate students and postdocs are among the most talented in the world,” Tanenhaus says. “As many top jobs have gone to people from Rochester as anywhere else in the country.

“There are Rochester footprints in all the major programs.”

—Scott Hauser

The gentle nudge from a committed advisor also has stayed with Tanenhaus, who in 2002 received the University’s award for excellence in graduate teaching. In nominating him, several of his current and former students, including many who are prominent researchers themselves, noted their former advisor’s selflessness in guiding them.

That trait also stands out for Sarah Brown-Schmidt, a fourth-year doctoral student who came to Rochester specifically to study with Tanenhaus. She’s worked with him on several studies on the interactive processes involved in conversation.

“He’s really good at helping me develop my own ideas rather than doing research on his, which is kind of unusual in a graduate program,” Brown-Schmidt says. “He helps me come up with clear ideas about the topics that I want to study.”

In any conversation, Tanenhaus is careful to credit the colleagues with whom he works, whether graduate students, postdoctoral fellows, or other faculty. And he’s particularly gratified that the eyetracker has proven beneficial as an interdisciplinary tool.

At Rochester, Tanenhaus collaborates with computer scientists, linguists, and vision science researchers who also share an interest in cognition, and in his own lab, his group of 10 graduate students and postdoctoral fellows is exploring a variety of linguistic issues.

Within psycholinguistics, the eyetracker is helping to build bridges between the “language as product” and “language as action” camps, Tanenhaus argues, because it gives each viewpoint a way to study how language is processed in a detailed, timecoded way—regardless of the specific questions being explored.

“One can begin to integrate the traditions,” Tanenhaus says. “It’s a general tool that everyone can use.”

Making such a contribution is a thrilling prospect for a researcher who first became enthralled with the study of language because of what it says about the human mind.

“Language is, in many ways, one of the defining characteristics of humanity,” Tanenhaus says. “To me, there are two intriguing aspects of studying the mind and brain: vision and language. In some ways, language is the more mysterious.

“The other intriguing thing about language is that I can take an idea that’s in my head and put it into your head. We can create worlds with language.”

For scholars like Tanenhaus, those worlds are limited only by the questions yet to be asked.

“That’s what I like to do—look for the intriguing questions,” he says.

The eyetracker is proving to be an important part of that search.

“The kinds of questions that we are asking and getting answers to are the kinds of questions I couldn’t even have thought of asking 15 years ago,” Tanenhaus says. “I feel as if I understand language much better now than I did 15 years ago.”

Scott Hauser is editor of Rochester Review.