Rochester researchers will harness the immersive power of virtual reality to study how the brain processes light and sound.

A cross-disciplinary team of researchers from the University of Rochester is collaborating on a project to use virtual reality (VR) to study how humans combine and process light and sound. The first project will be a study of multisensory integration in autism, motivated by prior work showing that children with autism have atypical multisensory processing.

The project was initially conceived by Shui’er Han, a postdoctoral research associate, and Victoire Alleluia Shenge ’19, ’20 (T5), a lab manager, in the lab of Duje Tadin, a professor of brain and cognitive sciences.

“Most people in my world—including most of my work—conduct experiments using artificial types of stimuli, far from the natural world,” Tadin says. “Our goal is to do multisensory research not using beeps and flashes, but real sounds and virtual reality objects presented in realistically looking VR rooms.”

A cognitive scientist, a historian, and an electrical engineer walk into a room . . .

Tadin’s partners in the study include Emily Knight, an incoming associate professor of pediatrics, who is an expert on brain development and multisensory processing in autism. But in creating the virtual reality environment the study participants will use—a virtual version of Kodak Hall at Eastman Theatre in downtown Rochester—Tadin formed collaborations well outside his discipline.

Faculty members working on this initial step in the research project include Ming-Lun Lee, an associate professor of electrical and computer engineering, and Michael Jarvis, an associate professor of history. Several graduate and undergraduate students are also participating.

Many of the tools they’ll use come from River Campus Libraries, in particular Studio X, the University’s hub for extended reality projects, and the Digital Scholarship department. Emily Sherwood, director of Studio X and Digital Scholarship, is leading the effort to construct the virtual replica of Kodak Hall.

The group recently gathered in the storied performance space to collect the audio and visual data that Studio X will rely on. University photographer J. Adam Fenster followed along to document the group’s work.

1

Members of the team begin the setup for audio and visual data collection. From left to right are Shui’er Han, a postdoctoral research fellow in Duje Tadin’s lab; brain and cognitive sciences major Betty Wu ’23; computer science and business major and e5 student Haochen Zeng ’23, who works in River Campus Libraries’ Studio X; and Victoire Alleluia Shenge ’19, ’20 (Take Five), who earned her degree in brain and cognitive sciences and is a manager in Tadin’s lab.

2

Han positions a dummy head equipped with a microphone in each ear. It will record sound from two perspectives, just as a human in the room would hear it.

3

Team members (clockwise from lower left foreground) Ming-Lun Lee, an associate professor of electrical and computer engineering and an expert in spatial audio; Wu; Shenge; electrical engineering PhD student Steve Philbert; and Han measure and record the location of the microphones on the Kodak Hall stage.

4

Philbert and Wu measure the distance between the binaural dummy head and a speaker.

5

Michael Jarvis, an associate professor of history, prepares a FARO laser scanner on the Kodak Hall stage. Jarvis is an expert on using 3D modeling software to digitally capture and create interactive spaces for users to virtually visit and tour. His digital history projects have included digitally capturing and analyzing archaeological sites and modeling historical buildings in Bermuda and in Ghana.

6

Team members wait outside Kodak Hall during a visual scan. Placing a single FARO laser scanner at various points around Kodak Hall, the group made eight scans, each taking approximately 12 minutes, and together capturing roughly one billion data points that will be used to generate a 3D model of the hall interior.

7

Jarvis takes photos around the hall that will be stitched together to make a complementary photogrammetry model of the space, augmenting the laser scans in areas where data points are sparse or missing. Photogrammetry models, which are built by extracting 3D information from 2D images, are less accurate than 3D models made with laser scans, but they have higher resolution.

8

Philbert, audio and music engineering major Joey Willenbucher ’23, and Tadin reposition a speaker. The speaker must be horizontally aligned with the microphone to best capture audio data.

9

Shenge uses a laser rangefinder, which generates the orange dot on the paper held by Tadin, to determine the optimal distance between a speaker and the binaural dummy head.

10

Blair Tinker (right), a research specialist for Geographic Information Systems Digital Scholarship at the River Campus Libraries, and Zeng are among those at Digital Scholarship and Studio X who will turn the visual and audio data into a VR replica of Kodak Hall that participants in Tadin’s study will use.

Says Emily Sherwood, director of Studio X and Digital Scholarship, Tadin’s project is “a prime example of how research using or on extended reality requires expertise and support from a broad range of disciplines. Having a hub like Studio X helps facilitate those connections because we know what different faculty are working on and how they might be able to work together.”

Studio X offers students, faculty, and staff the equipment necessary for a range of projects, from creating a VR replica of Kodak Hall to holding VR game competitions.

Read more

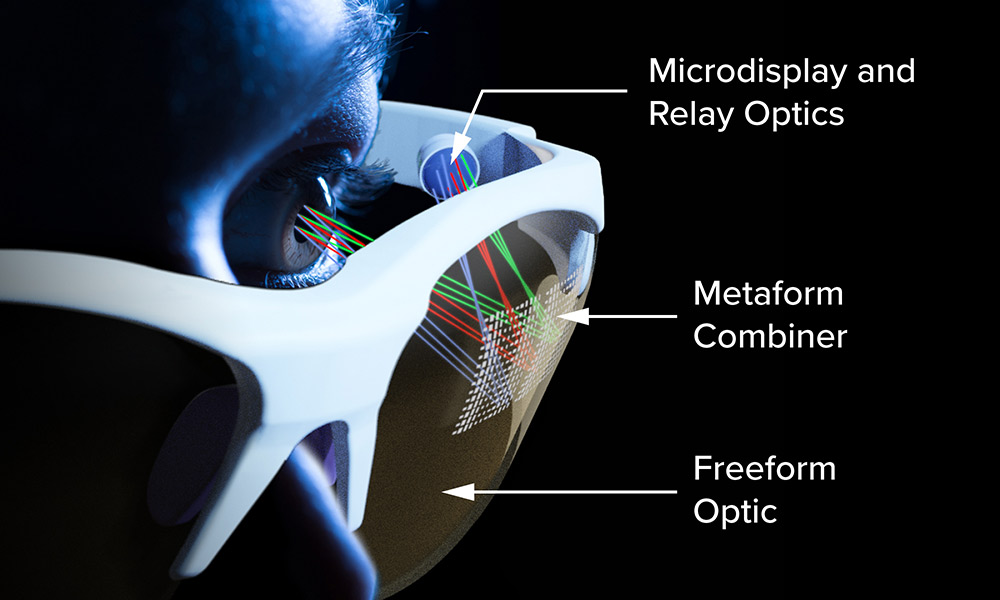

A new way to make AR/VR glasses look like regular glasses

A new way to make AR/VR glasses look like regular glassesRochester researchers are combining freeform optics and a metasurface to avoid ‘bug eyes’ in AR/VR glasses and headsets.

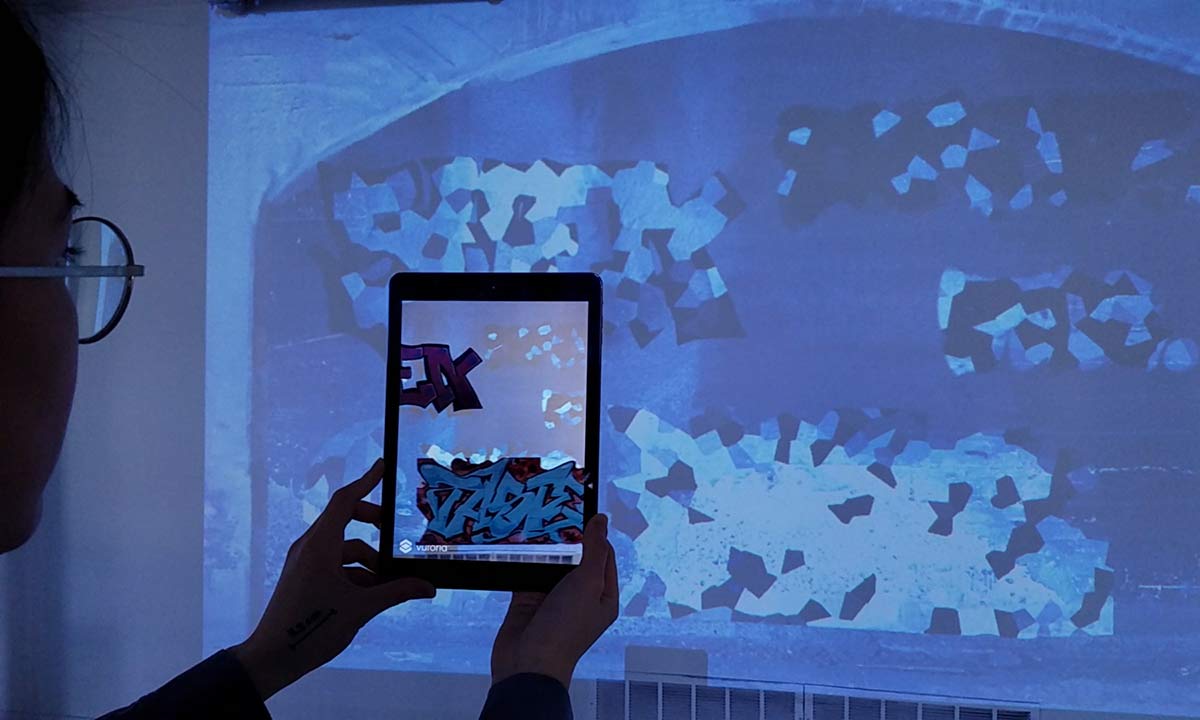

Seniors show beauty of urban art with augmented reality

Seniors show beauty of urban art with augmented realityFour Rochester students share graffiti art in abandoned city subway tunnels through augmented reality.

Studio X, technically

Studio X, technicallyStudio X’s existing technology library was built to grow as the University community’s needs and the extended reality (XR) market evolve.